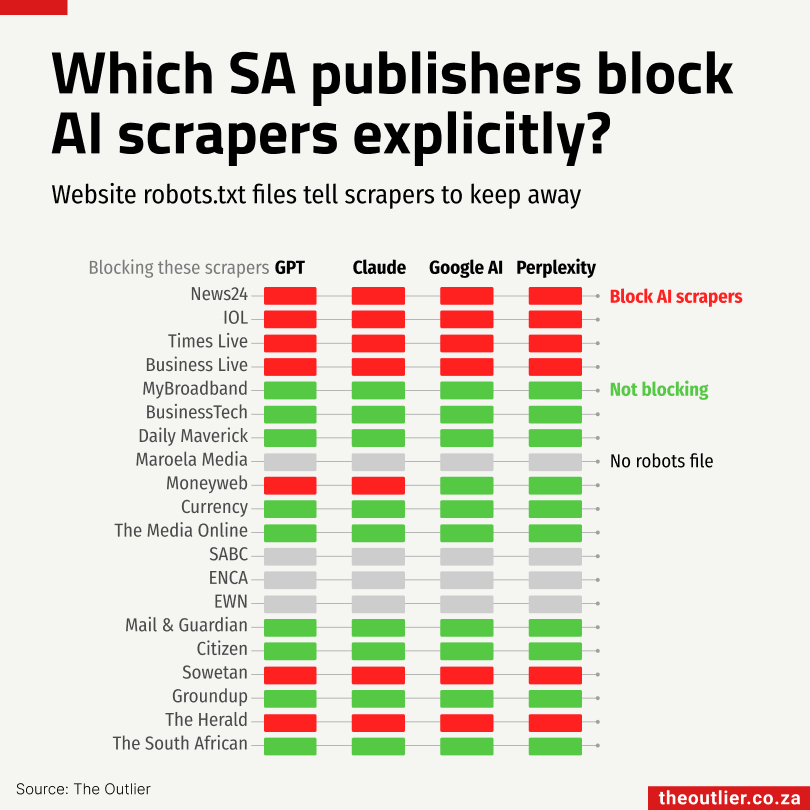

SA’s biggest news sites are blocking AI bots.

News24, IOL, and TimesLIVE, along with their sub-brands, have updated their robots.txt files to block crawlers from OpenAI, Google AI, Perplexity, and Anthropic (the company behind Claude). Most smaller publishers haven’t followed suit, and many have no robots.txt file at all.

So, what is robots.txt? It’s a small text file, served from the root of a site, that tells bots and web crawlers which parts of the site they’re allowed to visit. But it’s not enforceable. It’s more of a polite request. Reputable bots usually comply. Others don’t.
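To make that concrete, here is a rough sketch of what AI-blocking rules can look like and how a well-behaved crawler would read them. The sample file is made up for illustration and is not copied from any publisher. `example.com`, `SAMPLE_ROBOTS_TXT`, and `is_allowed` are hypothetical names. `GPTBot` and `ClaudeBot` are the crawler names that OpenAI and Anthropic publish for their bots. The check uses Python’s standard `urllib.robotparser` module.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt: block two AI crawlers, allow everyone else.
SAMPLE_ROBOTS_TXT = """\
User-agent: GPTBot
Disallow: /

User-agent: ClaudeBot
Disallow: /

User-agent: *
Allow: /
"""


def is_allowed(robots_txt: str, user_agent: str, url: str) -> bool:
    """Return True if the rules in robots_txt permit user_agent to fetch url."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


if __name__ == "__main__":
    story = "https://example.com/news/story"  # placeholder URL
    for agent in ("GPTBot", "ClaudeBot", "Googlebot"):
        print(agent, is_allowed(SAMPLE_ROBOTS_TXT, agent, story))
    # GPTBot False
    # ClaudeBot False
    # Googlebot True
```

Nothing in this check stops a bot from requesting the page anyway. It only tells a crawler that chooses to ask what the publisher’s answer is.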

Still, the presence (or absence) of AI-blocking rules in the file is telling. If a publisher hasn’t added them, it likely means AI scraping isn’t a major concern, or hasn’t been considered yet.

There are good reasons for concern. Publishers that invest heavily in original reporting have little incentive to let their work be scraped, paraphrased, and served up in chatbots, especially when that means fewer clicks, less traffic, and no attribution. The costs are real too: sites like Wikipedia have reported massive spikes in bandwidth usage from AI scraping bots.